AI 104: The Perfect Storm – Why AI Exploded, and What Else is Under the Hood


Artificial Intelligence


Feb 18

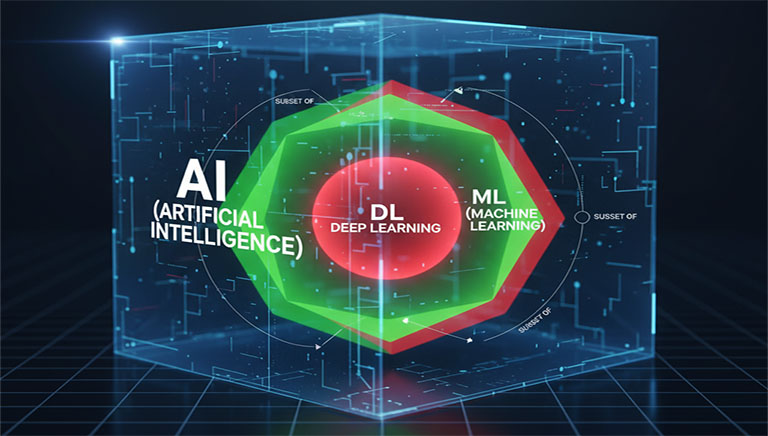

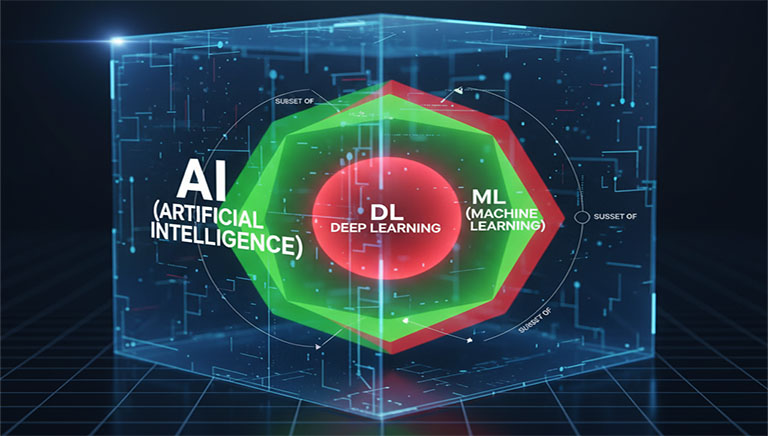

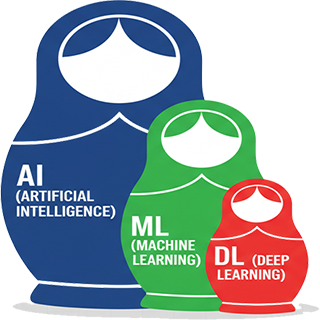

In our previous posts, we laid the groundwork: clarifying the Matryoshka doll relationship between AI, ML, and DL, and then dissecting the academic definition of 'intelligence' from a rational agent perspective. Now, it's time to address a critical question: If the idea of AI has been around for decades, why has it suddenly dominated headlines and reshaped industries in the last 10-15 years?

This final part of our Artificial Intelligence Series explores the factors that ignited the current AI revolution. It then broadens the view: AI is far more diverse than Machine Learning alone, and several older approaches remain relevant today.

Section 1: The Perfect Storm – Why AI is Booming Now

The history of AI is marked by "winters" – periods of reduced funding and interest due to unfulfilled promises. What ended the last AI winter and fueled the unprecedented surge we're witnessing today? The answer lies in a powerful synergy of three key advancements:

1) Big Data: The Fuel for Learning Algorithms

Modern Machine Learning algorithms are data-hungry: without large amounts of relevant data, they cannot learn effectively. The last two decades have seen an explosion in data generation:

- The Internet and Social Media: User-generated content, web pages, and interaction logs provide immense textual and visual data.

- Smartphones and Mobile Devices: Every photo, video, text message, and location datum contributes to the global data pool.

- Internet of Things (IoT): Billions of connected devices—from smart home appliances to industrial sensors—constantly stream environmental, operational, and behavioral data.

This deluge of data, often unstructured and diverse, became the indispensable fuel that allowed ML and DL models to move from theoretical curiosities to practical powerhouses.

2) Computational Power: The Engine of Deep Learning (GPUs)

Deep Learning models, with their complex, multi-layered neural networks, require immense computational resources. Training these models involves billions of mathematical operations, primarily matrix multiplications and additions.

Historically, general-purpose Central Processing Units (CPUs) were too slow for this workload. The game-changer was the realization that Graphics Processing Units (GPUs), originally designed to render complex 3D graphics for video games, excel at exactly this kind of computation: many simple arithmetic operations carried out in parallel.

The adoption of GPUs in AI research significantly reduced training times from months or weeks to days or even hours, enabling rapid experimentation and the development of much larger, more sophisticated models. This hardware advancement was pivotal in making Deep Learning feasible at scale.
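To make the "matrix multiplications and additions" concrete, here is a minimal pure-Python sketch of one fully connected layer. The function names (`matmul`, `dense_layer`) and the numbers are made up for illustration; real frameworks run the same arithmetic on optimized GPU kernels rather than Python loops.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            # out[i][j] depends only on row i of a and column j of b, so all
            # n * m cells are independent and could be computed at the same time.
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


def dense_layer(inputs, weights, bias):
    """One fully connected layer without activation: inputs @ weights + bias."""
    product = matmul(inputs, weights)
    return [[value + bias[j] for j, value in enumerate(row)] for row in product]


# Illustrative values: a batch of 2 examples with 2 features, mapped to 3 outputs.
batch = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [1.0, 0.0, 2.0]]
bias = [0.1, 0.2, 0.3]
outputs = dense_layer(batch, weights, bias)
print(outputs)
```

The two outer loops are the part a GPU parallelizes: no output cell needs the result of any other, which is why hardware built for thousands of simultaneous pixel calculations fits this workload so well.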

3) Algorithmic Advancements & Open-Source Tools: The Blueprint and Infrastructure

While some core neural network ideas have existed for decades, significant algorithmic breakthroughs and optimizations were crucial. Key architectures include:

- Convolutional Neural Networks (CNNs): Revolutionized image processing (e.g., AlexNet's 2012 ImageNet victory).

- Recurrent Neural Networks (RNNs) & LSTMs: Essential for sequential data like natural language and time series.

- Transformer Architecture: The breakthrough behind modern large language models (LLMs) like GPT and BERT, allowing for highly efficient parallel processing of sequential data.

Coupled with these, the rise of open-source software libraries and frameworks (TensorFlow, PyTorch, Keras, scikit-learn) democratized AI development. Researchers and developers worldwide could build on state-of-the-art tools without rebuilding everything from scratch, which sped up innovation considerably.

These three factors—data, hardware, and algorithms/tools—formed a virtuous cycle, each feeding and accelerating the others, leading to the current AI renaissance.

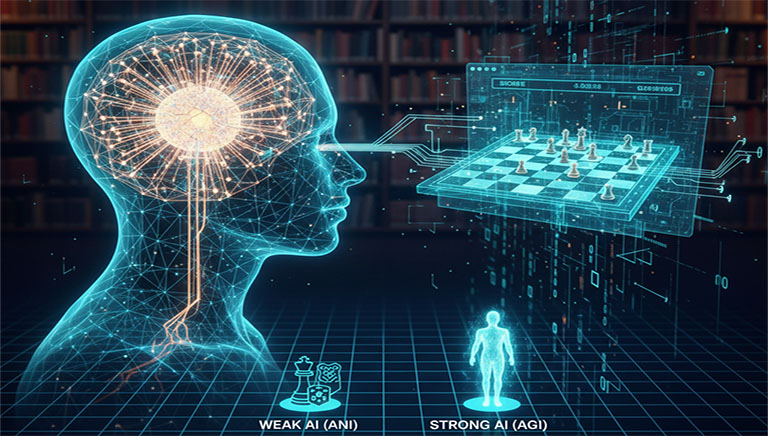

Section 2: Beyond the Hype – The Broader Landscape of AI

While Machine Learning and Deep Learning dominate current discourse, it's crucial to remember they are powerful subsets within the vast field of Artificial Intelligence. Many other significant and historically important branches of AI continue to solve complex problems where ML might not be the most appropriate or efficient solution. Understanding these broadens our perspective on what "intelligence" in a machine can truly mean.

1) Symbolic AI (GOFAI - Good Old-Fashioned AI):

This approach, dominant from the field's early days in the 1950s through the 1980s, focuses on explicit knowledge representation and logical reasoning. Instead of learning from data, systems are programmed with rules, facts, and logical inference mechanisms.

- Expert Systems: These were prominent, encoding human expert knowledge (e.g., medical diagnosis rules, financial advice) into IF-THEN statements. They provided clear, explainable decisions.

- Knowledge Representation: Logic-based systems used formal languages (like First-Order Logic) to represent knowledge and deduce new facts.

Why it's still relevant: For tasks requiring strict logical consistency, traceability, and explainability (e.g., legal reasoning, formal verification), symbolic AI still offers advantages. Modern research in Neuro-Symbolic AI attempts to combine its reasoning power with ML's pattern recognition.
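As a rough illustration of how IF-THEN rules produce explainable decisions, here is a minimal forward-chaining sketch. The rules and fact names are toy examples invented for this post (not real medical guidance), and `forward_chain` is a hypothetical helper, not the design of any particular expert system.

```python
def forward_chain(facts, rules):
    """Apply IF-THEN rules until no new fact can be derived.

    Each rule is a (premises, conclusion) pair. Returns the final set of
    facts plus a trace recording which premises produced each new fact.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all its premises are known and it adds something new.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                trace.append((tuple(premises), conclusion))
                changed = True
    return known, trace


# Toy knowledge base (assumed for illustration only).
RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "refer_to_doctor"),
    (("rash",), "possible_allergy"),
]

known, trace = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
for premises, conclusion in trace:
    print("IF", " AND ".join(premises), "THEN", conclusion)
```

The trace is the explainability: every conclusion can be traced back to the exact rule and facts that produced it, which is much harder to get from a trained neural network.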

2) Search and Planning Algorithms:

These are foundational to AI, particularly for problem-solving and decision-making in discrete spaces.

- Pathfinding Algorithms (e.g., A* search): Used in GPS navigation, video games (NPC movement), and logistics to find the lowest-cost path between two points.

- Game Playing (e.g., Minimax, Alpha-Beta Pruning): Algorithms that explore future moves in games like chess to choose the strongest move. AlphaGo, while using Deep Learning, still relies heavily on Monte Carlo tree search.

- Automated Planning: Creating a sequence of actions to achieve a specific goal (e.g., a robot assembling a product, an AI controlling spacecraft maneuvers).
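To give the pathfinding bullet above some shape, here is a compact A* sketch on a small grid. The grid, the 4-directional movement, the unit step cost, and the names `a_star` and `GRID` are all assumptions chosen for illustration; real navigation systems work on road graphs with travel-time costs.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest path on a grid of 0 (free) and 1 (wall) cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    estimate = new_cost + heuristic((nr, nc))
                    heapq.heappush(open_heap, (estimate, new_cost, (nr, nc)))
    return None


GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = a_star(GRID, (0, 0), (3, 3))
print(path)  # [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 3), (3, 3)]
```

The heuristic is what separates A* from a blind search: it steers exploration toward the goal while still guaranteeing a shortest path, as long as it never overestimates the remaining distance.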

Why it's still relevant: These algorithms are essential for structured problem-solving where objectives are clear and actions are discrete.

3) Evolutionary Computation:

Inspired by biological evolution and natural selection, these algorithms search for optimal solutions by iteratively evolving a population of candidates.

- Genetic Algorithms: Mimic processes like mutation, crossover, and selection to find solutions to optimization and search problems.

- Genetic Programming: Extends genetic algorithms to evolve computer programs themselves.

Why it's still relevant: Excellent for complex optimization problems, design automation, and situations where the search space is too vast for exhaustive search or gradient-based methods.
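Here is a small sketch of a genetic algorithm on a deliberately simple toy problem: evolve a bit string containing as many 1s as possible. The problem, the parameter values, and the names `fitness` and `evolve` are assumptions for illustration; tournament selection and keeping the best individual (elitism) are just two of several common design choices.

```python
import random


def fitness(bits):
    """Toy objective: the number of 1s in the bit string."""
    return sum(bits)


def evolve(length=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Evolve bit strings toward all 1s; return the best one and the fitness history."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        next_gen = [population[0][:]]  # elitism: the current best survives unchanged
        while len(next_gen) < pop_size:
            # Selection: each parent is the fittest of 3 randomly drawn candidates.
            parent_a = max(rng.sample(population, 3), key=fitness)
            parent_b = max(rng.sample(population, 3), key=fitness)
            # Crossover: splice the parents at a random cut point.
            cut = rng.randrange(1, length)
            child = parent_a[:cut] + parent_b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            next_gen.append(child)
        population = next_gen
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
print("best fitness per generation:", history)
```

Nothing in the loop computes a gradient or enumerates all 2^20 candidates; the population improves only through selection, crossover, and mutation. Because of elitism, the best fitness in `history` can never go down from one generation to the next.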

4) Fuzzy Logic:

Unlike classical Boolean logic (true/false, 0/1), fuzzy logic deals with degrees of truth, allowing for concepts like "somewhat hot" or "partially true."

Why it's still relevant: Ideal for control systems that need to make decisions based on imprecise or subjective inputs, often found in consumer electronics (washing machines, air conditioners) and industrial control.
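As a sketch of what "degrees of truth" look like in a controller, the example below maps a room temperature to a fan speed. The membership breakpoints, the three rule outputs, and all function names are made up for illustration. The blending step is a simple weighted average in the spirit of a zero-order Sugeno controller, which is only one of several ways to turn fuzzy rules into a crisp output.

```python
def falling(x, full, zero):
    """Membership that is 1 up to `full`, then drops linearly to 0 at `zero`."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def rising(x, zero, full):
    """Membership that is 0 up to `zero`, then climbs linearly to 1 at `full`."""
    if x <= zero:
        return 0.0
    if x >= full:
        return 1.0
    return (x - zero) / (full - zero)


def triangle(x, left, peak, right):
    """Membership that is 0 outside (left, right) and 1 at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def memberships(temp_c):
    """Degree (0 to 1) to which a temperature is cold, warm, and hot."""
    return {
        "cold": falling(temp_c, 10, 20),
        "warm": triangle(temp_c, 15, 25, 35),
        "hot": rising(temp_c, 30, 40),
    }


# One rule per fuzzy set: IF cold THEN 0%, IF warm THEN 50%, IF hot THEN 100%.
FAN_SPEED = {"cold": 0.0, "warm": 50.0, "hot": 100.0}


def fan_speed(temp_c):
    """Blend the rule outputs, weighted by how strongly each rule applies."""
    degrees = memberships(temp_c)
    total = sum(degrees.values())  # never 0: the three sets cover every temperature
    return sum(degrees[name] * FAN_SPEED[name] for name in degrees) / total


print(memberships(32.5))  # {'cold': 0.0, 'warm': 0.25, 'hot': 0.25}
print(fan_speed(32.5))    # 75.0
```

At 32.5 °C the room is partly "warm" and partly "hot" at the same time, so the fan runs at a speed between the two rule outputs instead of jumping abruptly from 50% to 100%.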

Conclusion: The End of the AI Series, The Beginning of Your Journey

This exploration has revealed that the current AI boom is not merely a sudden stroke of genius but the culmination of decades of research converging with unprecedented data and computational power. It also reminds us that "AI" is a rich and diverse academic field, with many powerful paradigms beyond just the currently dominant Machine Learning approaches. Each method has its domain of applicability and continues to contribute to the grand goal of artificial intelligence.

We've covered the foundational concepts of Artificial Intelligence, from its core definitions and philosophical underpinnings to the technological forces driving its current surge, and the broader landscape of its diverse subfields. My hope is that this series has provided you with a robust, academic, yet engaging foundation to understand AI more deeply.

This concludes our dedicated "Artificial Intelligence Series." Thank you for joining me on this journey!

Announcement: I plan to create an external Blogger site and write my blog posts there. Of course, the link will be in my personal site's menu. Since I find this site's built-in blog tool clunky and cumbersome, I'll be switching to Blogger.

3 Comments Found

Anthony Miller

Great final post to the series.

Recep Yılmaz

This article is incredibly misleading. You spend half of it talking about 'Symbolic AI' and 'Search Algorithms'? That's just a bunch of If-Then statements and A* search. That's not 'AI,' that's just... programming. Real AI is Deep Learning and Transformers. By mixing up old-school calculators with actual learning models, you're confusing your readers and devaluing what real AI researchers are doing.

Burak Duman (Author)

Hi Recep, thank you for sharing your perspective. However, the premise of your criticism—that 'real AI' is only Deep Learning—is the exact misconception this entire academic series was designed to correct.

The field of 'Artificial Intelligence' was formally established at the 1956 Dartmouth Conference, which was largely devoted to the concepts you're dismissing as 'just programming' (Symbolic AI, search algorithms, logic). These are the foundational pillars of the entire discipline, as defined in every major computer science curriculum.

Deep Learning and Transformers are indeed the most recent and powerful tools in the AI toolbox, but they are not the only tools. To claim that 'Search Algorithms' (like the one that powers your GPS) or 'Expert Systems' (that assist in medicine) are not AI is to ignore the literal history and breadth of the field.

What you are calling 'misleading' is, in fact, the standard academic definition. What you are calling 'real AI' is simply the newest branch of it. I hope the series helps clarify this important distinction.

Zahir Agarwal

The 'Perfect Storm' section was a perfect explanation of why AI is suddenly everywhere.